Definition of JITTER in Network Encyclopedia.

What is Jitter?

Jitter is distortion in a transmission that occurs when a signal drifts from its reference position. Jitter can be caused by variations in the timing or the phase of the signal in an analog or digital transmission line.

Excessive jitter typically results in a loss of data because of synchronization problems between the communicating stations, especially in high-speed transmissions.

Jitter is inherent in all forms of communication because of the finite response time of electrical circuitry to the rise and fall of signal voltages.

An ideal digital signal would have instantaneous rises and falls in voltages and would appear as a square wave on an oscilloscope.

The actual output of a digital signaling device has finite rise and fall times and appears rounded when displayed on the oscilloscope, which can result in phase variation that causes loss of synchronization between communicating devices.

The goal in designing a transmission device is to ensure that the jitter remains within a range that is too small to cause appreciable data loss.

Jitter Examples

Sampling jitter

In analog-to-digital and digital-to-analog conversion of signals, the sampling is normally assumed to be periodic with a fixed period, so the time between any two consecutive samples is the same. If there is jitter present on the clock signal to an analog-to-digital converter or a digital-to-analog converter, the time between samples varies and an instantaneous signal error arises. The error is proportional to the slew rate of the desired signal and the absolute value of the clock error. The effect of jitter on the signal depends on the nature of the jitter. Random jitter tends to add broadband noise, while periodic jitter tends to add errant spectral components ("birdies"). In some conditions, less than a nanosecond of jitter can reduce the effective bit resolution of a converter with a Nyquist frequency of 22 kHz to 14 bits.
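
The relationship between clock error, slew rate, and effective resolution can be checked with a short calculation. The sketch below is illustrative only and assumes a full-scale sine-wave input, random (RMS) clock jitter, and the common textbook approximations SNR = -20·log10(2π·f·σ) and ENOB = (SNR - 1.76) / 6.02; neither formula nor any of the function names comes from this article.

```python
import math


def worst_case_error(amplitude: float, signal_hz: float, clock_error_s: float) -> float:
    """Peak sampling error: maximum slew rate of a sine times the timing error."""
    max_slew = 2.0 * math.pi * signal_hz * amplitude  # volts per second at zero crossing
    return max_slew * abs(clock_error_s)


def jitter_limited_snr_db(signal_hz: float, rms_jitter_s: float) -> float:
    """Approximate SNR ceiling (dB) set by random clock jitter alone (assumed model)."""
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * rms_jitter_s)


def effective_bits(snr_db: float) -> float:
    """Convert an SNR in dB to an effective number of bits (ideal-quantizer relation)."""
    return (snr_db - 1.76) / 6.02


# Assumed example values: a 22 kHz tone sampled with 0.36 ns of RMS jitter.
snr = jitter_limited_snr_db(22_000.0, 0.36e-9)
enob = effective_bits(snr)
print(f"SNR ceiling: {snr:.1f} dB, effective resolution: {enob:.1f} bits")
```

Under these assumptions, roughly a third of a nanosecond of jitter at 22 kHz gives about 14 effective bits, which is consistent with the figure quoted above.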

Sampling jitter is an important consideration in high-frequency signal conversion, or where the clock signal is especially prone to interference.

Packet jitter in computer networks

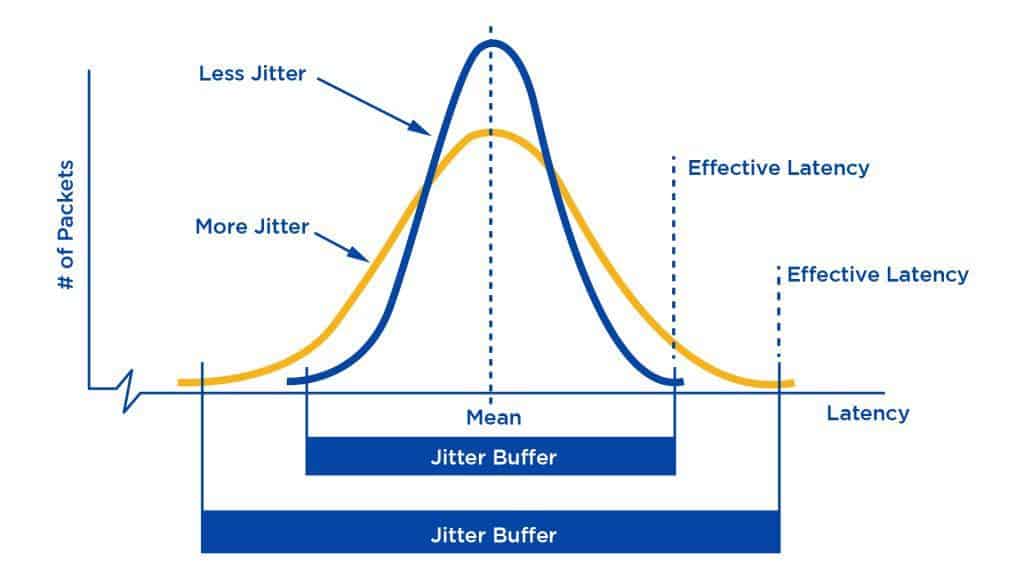

In the context of computer networks, packet jitter or packet delay variation (PDV) is the variation in latency, measured as the variability over time of the end-to-end delay across a network. A network with constant delay has no packet jitter. Packet jitter is expressed as an average of the deviation from the network mean delay. PDV is an important quality of service factor in the assessment of network performance.
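
As a rough illustration of that definition, the sketch below computes packet jitter as the mean absolute deviation of per-packet delays from their mean. The function name and the sample delay values are hypothetical, and real measurement tools may use other estimators (for example, smoothed differences between consecutive packets).

```python
from statistics import mean


def packet_jitter(delays_ms: list[float]) -> float:
    """Mean absolute deviation of end-to-end delays from the mean delay."""
    avg = mean(delays_ms)
    return mean([abs(d - avg) for d in delays_ms])


# Hypothetical one-way delays in milliseconds.
steady = [20.0, 20.0, 20.0, 20.0]
variable = [10.0, 20.0, 30.0]

print(packet_jitter(steady))    # constant delay, so no jitter
print(packet_jitter(variable))  # deviations of 10, 0 and 10 ms from the 20 ms mean
```

A constant-delay path yields zero, matching the statement that a network with constant delay has no packet jitter.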

Transmitting a burst of traffic at a high rate, followed by a period of lower or zero rate transmission, may also be seen as a form of jitter, as it represents a deviation from the average transmission rate. However, unlike the jitter caused by variation in latency, transmitting in bursts may be seen as a desirable feature, e.g. in variable bitrate transmissions.

Video and image jitter

Video or image jitter occurs when the horizontal lines of video image frames are randomly displaced due to the corruption of synchronization signals or electromagnetic interference during video transmission. Model-based dejittering has been studied within the framework of digital image and video restoration.
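
The line displacement described above can be pictured with a toy example. The sketch below shifts each row of a small image by its own horizontal offset. It is a simplification, not a model of any real video system: the function name is hypothetical, the offsets are supplied by hand rather than drawn at random, and pixels wrap around the row edge.

```python
def jitter_rows(image: list[list[int]], offsets: list[int]) -> list[list[int]]:
    """Cyclically shift each row of `image` right by the matching entry of `offsets`."""
    shifted = []
    for row, offset in zip(image, offsets):
        k = offset % len(row)  # normalise negative (leftward) offsets
        shifted.append(row[-k:] + row[:-k] if k else list(row))
    return shifted


# A 3x4 frame with a vertical edge; each line gets a different displacement.
frame = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
for line in jitter_rows(frame, [0, 1, -1]):
    print(line)
```

After the shifts, the vertical edge no longer lines up from row to row, which is the visual signature of line jitter.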