Load balancing is a technique for distributing incoming network traffic across multiple servers or backend systems. It keeps server resources efficiently used and helps services stay available and reliable. This article gives an overview of load balancing: what it is, why it matters, how it works, and the algorithms that power it. It also covers the benefits load balancing brings to businesses and end users, and the challenges to plan for.

In this article:

- What is Load Balancing?

- Network Load Balancing (NLB) feature in Windows Server 2016

- Load Balancing Algorithms

- Benefits of Load Balancing

- Challenges and Considerations in Load Balancing


1. What is Load Balancing?

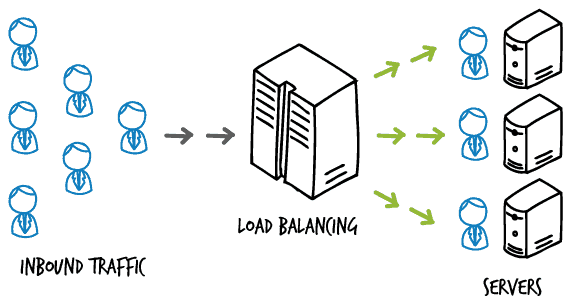

Load balancing is the process of efficiently distributing incoming network traffic across a pool of servers, ensuring that no single server is overwhelmed with too much demand. This is crucial for maintaining high availability and reliability of services. The servers in this context can be physical machines, virtual machines, or a mix of both. These servers collectively are often referred to as a “server farm” or “server pool.”

At the most fundamental level, load balancing improves the distribution of workloads across multiple computing resources. This results in optimized resource use, maximized throughput, minimized response time, and prevention of overload on any single resource. Load balancing is most commonly performed at the transport layer (Layer 4) or the application layer (Layer 7) of the OSI model, but it can also be performed at other layers.

The load balancer sits between the client and the server farm and routes client requests to the most appropriate server. To decide which server should handle a request, the load balancer uses a variety of algorithms, such as Round Robin, Least Connections, or IP Hashing. These algorithms are designed to improve performance and enhance the user experience.

Historical Background

The concept of load balancing has been around since the early days of computing, but it gained prominence with the growth of the Internet and the resulting increase in web traffic. Initially, the main goal was simply to distribute incoming requests so that no server failed under load. As technology evolved, so did the role and sophistication of load balancing.

In the 1990s, hardware-based load balancing solutions were common, but they were costly and inflexible. The advent of cloud computing and virtualization in the 2000s led to more software-defined load balancing solutions. These were not only cost-effective but also offered greater flexibility and easier scaling options. The development of Application Delivery Controllers (ADCs) took load balancing a step further by adding features such as SSL offloading, application acceleration, and web application firewall capabilities.

Today, load balancing is an essential component of any enterprise-level or cloud-native application architecture. Whether it’s balancing load across multiple data centers or within a single cloud environment, the principles remain the same: distribute, optimize, and ensure reliability.

2. Network Load Balancing (NLB) feature in Windows Server 2016

The Network Load Balancing (NLB) feature distributes traffic across several servers by using the TCP/IP networking protocol. By combining two or more computers that are running applications into a single virtual cluster, NLB provides reliability and performance for web servers and other mission-critical servers.

The servers in an NLB cluster are called hosts, and each host runs a separate copy of the server applications. NLB distributes incoming client requests across the hosts in the cluster. You can configure the load that is to be handled by each host. You can also add hosts dynamically to the cluster to handle increased load. NLB can also direct all traffic to a designated single host, which is called the default host.

NLB allows all of the computers in the cluster to be addressed by the same set of IP addresses, and it maintains a set of unique, dedicated IP addresses for each host. For load-balanced applications, when a host fails or goes offline, the load is automatically redistributed among the computers that are still operating. When it is ready, the offline computer can transparently rejoin the cluster and regain its share of the workload, which allows the other computers in the cluster to handle less traffic.

NLB practical applications

NLB is useful for ensuring that stateless applications, such as web servers running Internet Information Services (IIS), are available with minimal downtime, and that they are scalable (by adding additional servers as the load increases). The following sections describe how NLB supports high availability, scalability, and manageability of the clustered servers that run these applications.

High availability

A high availability system reliably provides an acceptable level of service with minimal downtime. To provide high availability, NLB includes built-in features that can automatically:

- Detect a cluster host that fails or goes offline, and then recover.

- Balance the network load when hosts are added or removed.

- Recover and redistribute the workload within ten seconds.

Scalability

Scalability is the measure of how well a computer, service, or application can grow to meet increasing performance demands. For NLB clusters, scalability is the ability to incrementally add one or more systems to an existing cluster when the overall load of the cluster exceeds its capabilities. To support scalability, you can do the following with NLB:

- Balance load requests across the NLB cluster for individual TCP/IP services.

- Support up to 32 computers in a single cluster.

- Balance multiple server load requests (from the same client or from several clients) across multiple hosts in the cluster.

- Add hosts to the NLB cluster as the load increases, without causing the cluster to fail.

- Remove hosts from the cluster when the load decreases.

- Enable high performance and low overhead through a fully pipelined implementation. Pipelining allows requests to be sent to the NLB cluster without waiting for a response to a previous request.

Manageability

To support manageability, you can do the following with NLB:

- Manage and configure multiple NLB clusters and the cluster hosts from a single computer by using NLB Manager or the Network Load Balancing (NLB) Cmdlets in Windows PowerShell.

- Specify the load balancing behavior for a single IP port or group of ports by using port management rules.

- Define different port rules for each website. If you use the same set of load-balanced servers for multiple applications or websites, port rules are based on the destination virtual IP address (using virtual clusters).

- Direct all client requests to a single host by using optional, single-host rules. NLB routes client requests to a particular host that is running specific applications.

- Block undesired network access to certain IP ports.

- Enable Internet Group Management Protocol (IGMP) support on the cluster hosts to control switch port flooding (where incoming network packets are sent to all ports on the switch) when operating in multicast mode.

- Start, stop, and control NLB actions remotely by using Windows PowerShell commands or scripts.

- View the Windows Event Log to check NLB events. NLB logs all actions and cluster changes in the event log.
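
The port-rule behaviour described in the list above (balance some ports, pin others to one host, block the rest) can be pictured as a lookup over port ranges. The following is only a rough sketch, not NLB's actual configuration format or API: the `PortRule` class, the `action_for` function, and the action labels `"balance"`, `"single-host"`, `"block"`, and `"default-host"` are hypothetical names chosen for illustration, and sending unmatched ports to the default host is an assumption based on the default-host behaviour described earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortRule:
    """Hypothetical model of one port rule (not NLB's real data format)."""
    start_port: int
    end_port: int
    action: str  # "balance", "single-host", or "block" (illustrative labels)


def action_for(port, rules):
    """Return the action of the first rule whose port range covers `port`."""
    for rule in rules:
        if rule.start_port <= port <= rule.end_port:
            return rule.action
    # Assumption: traffic not covered by any rule goes to the default host.
    return "default-host"


rules = [
    PortRule(80, 80, "balance"),          # spread web traffic across hosts
    PortRule(443, 443, "balance"),
    PortRule(3389, 3389, "single-host"),  # send to one designated host
    PortRule(21, 23, "block"),            # drop undesired traffic
]

for port in (80, 22, 3389, 8080):
    print(port, action_for(port, rules))
```

In a real cluster these rules would be defined through NLB Manager or the NLB PowerShell cmdlets rather than in application code.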

Various network devices can also implement load balancing. For example, routers use load balancing when routing tables indicate that two or more routes to a destination have the same cost. This use of routers allows you to use different LAN segments more effectively, resulting in greater availability of overall network bandwidth.
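
As a rough illustration of the router case, the sketch below first finds the routes that tie for the lowest cost and then picks one of them per flow. Hashing the flow's addresses and ports so that packets of one flow stay on one path is an assumption about how such a choice is commonly made, not something stated above; the function names and route data are hypothetical.

```python
import zlib


def equal_cost_routes(routes):
    """Return the next hops that share the lowest cost.

    `routes` is a list of (next_hop, cost) pairs for one destination.
    """
    best = min(cost for _, cost in routes)
    return [hop for hop, cost in routes if cost == best]


def pick_next_hop(flow, next_hops):
    """Choose a next hop by hashing the flow fields (assumed approach).

    The same flow always maps to the same next hop, so its packets
    are not reordered across different paths.
    """
    key = "|".join(str(field) for field in flow).encode("utf-8")
    return next_hops[zlib.crc32(key) % len(next_hops)]


routes = [("10.0.0.1", 10), ("10.0.0.2", 10), ("10.0.0.3", 20)]
candidates = equal_cost_routes(routes)
print(candidates)  # ['10.0.0.1', '10.0.0.2']

# (source IP, source port, destination IP, destination port)
flow = ("192.0.2.10", 51512, "198.51.100.5", 443)
print(pick_next_hop(flow, candidates))
```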

3. Load Balancing Algorithms

Understanding the algorithms used for load balancing is essential for selecting the right method for a specific network environment. The right algorithm helps achieve efficient resource utilization, optimal performance, and high availability. This section covers some of the most commonly used load balancing algorithms:

Round Robin

Round Robin is perhaps the simplest load balancing algorithm. It distributes incoming client requests in a cyclical fashion across all servers in the backend pool. Each server is chosen in turn, and the cycle repeats when the last server is reached. While easy to implement and effective for environments where all servers have similar capabilities, Round Robin doesn’t consider the current load on each server, which could lead to uneven distribution if some tasks are more resource-intensive than others.
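
A minimal sketch of the idea in Python follows. The class name `RoundRobinBalancer` and the server names are hypothetical, and a real load balancer would add health checks and concurrency control on top of this.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order (illustrative only)."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def next_server(self):
        # Current load is never consulted: each call just advances the cycle.
        return next(self._servers)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Because the selection ignores what each server is doing, a server stuck on a slow request still receives its next turn on schedule.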

Least Connections

The Least Connections algorithm allocates incoming requests to the server with the fewest active connections. This algorithm is more dynamic than Round Robin, as it considers the current load on each server. It’s especially useful when the servers have different processing speeds or when the network tasks are long and vary in computational complexity. The key advantage here is that it minimizes the risk of overloading a single server.
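
One way to picture this is a counter per server that goes up when a request is assigned and down when it completes. The sketch below assumes the balancer is told when each connection ends; the class and method names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Tracks active connections and picks the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}
        if not self.active:
            raise ValueError("at least one server is required")

    def acquire(self):
        # Ties go to the server listed first, because min() returns the
        # first minimum and dicts preserve insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the connection finishes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
first = lb.acquire()    # 'app-1' (both idle, first in the list wins)
second = lb.acquire()   # 'app-2'
lb.release(first)       # the request on app-1 completes
third = lb.acquire()    # 'app-1' again, since it now has fewer connections
print(first, second, third)
```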

IP Hashing

IP Hashing is a more specialized form of load balancing that hashes the client’s IP address to direct the request to a specific server. This ensures that a client consistently connects to the same server, which is useful for session persistence in applications that keep session state or cached data on the server. However, this method can lead to uneven load distribution if a large number of requests come from a few IP addresses, for example when many users sit behind the same proxy or NAT gateway.
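
A minimal sketch of the mapping, assuming a fixed list of servers: the function name `pick_by_ip` and the addresses are hypothetical. It uses `hashlib` rather than Python's built-in `hash()`, because string hashes from `hash()` are randomized per process and would not give a stable mapping across restarts.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP to a server with a stable hash (illustrative)."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


pool = ["app-1", "app-2", "app-3"]
print(pick_by_ip("203.0.113.7", pool))
print(pick_by_ip("203.0.113.7", pool))  # same server as the line above
```

With this simple modulo scheme, adding or removing a server changes `len(servers)` and therefore remaps many clients to a different server.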

Weighted Round Robin

In Weighted Round Robin, each server is assigned a weight based on its processing capacity. Servers with higher weights receive more client requests than those with lower weights. This is a modification of the basic Round Robin algorithm and is suitable for environments where the backend servers have varied capabilities.
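
The simplest way to sketch this is to repeat each server in the rotation as many times as its weight. The function name and weights below are hypothetical, and weights are assumed to be positive integers.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Return an endless iterator that repeats servers by weight.

    `weights` maps server name to a positive integer weight.
    """
    schedule = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    if not schedule:
        raise ValueError("at least one server with a positive weight is required")
    return cycle(schedule)


wrr = weighted_round_robin({"big": 3, "small": 1})
picks = [next(wrr) for _ in range(8)]
print(picks)
# ['big', 'big', 'big', 'small', 'big', 'big', 'big', 'small']
```

This naive schedule sends requests to the heavier server in bursts. Production balancers often interleave the picks more evenly while keeping the same overall ratio.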

Weighted Least Connections

This is a variant of the Least Connections algorithm, where each server is assigned a weight. The algorithm divides the number of active connections on each server by its weight to identify which server should receive the next request. This method aims to harmonize the Least Connections strategy with the servers’ varied processing capabilities.
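
The division described above can be sketched in a few lines. The function name and the sample numbers are hypothetical; weights are assumed to be positive.

```python
def pick_weighted_least_connections(active, weights):
    """Pick the server with the lowest active-connections-to-weight ratio."""
    for server, weight in weights.items():
        if weight <= 0:
            raise ValueError(f"weight for {server!r} must be positive")
    return min(active, key=lambda server: active[server] / weights[server])


active = {"big": 6, "small": 3}
weights = {"big": 4, "small": 1}

# big:   6 / 4 = 1.5
# small: 3 / 1 = 3.0
print(pick_weighted_least_connections(active, weights))  # big
```

Plain Least Connections would pick `small` here because it has fewer connections in absolute terms. The weighted variant picks `big` because it is less loaded relative to its capacity.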

Source-based Hashing

Source-based Hashing uses attributes of the source request to hash and select a server. This could be based on the source IP address, or some other attribute in the header or payload. This ensures session persistence but can have the same downside as IP Hashing in terms of potentially uneven distribution.
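
As a sketch, the hash key can be built from whichever request attributes are configured. The request dictionary, its field names (`src_ip`, `session_id`), and the function name are hypothetical.

```python
import hashlib


def pick_by_attributes(request, keys, servers):
    """Hash the chosen request attributes to select a server (illustrative)."""
    material = "|".join(str(request.get(key, "")) for key in keys)
    digest = hashlib.sha256(material.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


pool = ["app-1", "app-2", "app-3"]
from_office = {"src_ip": "203.0.113.7", "session_id": "abc123"}
from_mobile = {"src_ip": "198.51.100.20", "session_id": "abc123"}

# Keyed on the session identifier, both requests reach the same server
# even though the source IP changed.
a = pick_by_attributes(from_office, ("session_id",), pool)
b = pick_by_attributes(from_mobile, ("session_id",), pool)
print(a == b)  # True
```

Keying on something finer-grained than the IP address can also spread out clients that share one address, at the cost of having to inspect more of the request.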

Geographic-based

In Geographic-based load balancing, the client’s geographic location is used to direct the request to the nearest server. This is commonly used in Content Delivery Networks (CDNs) to reduce latency and improve the user experience.
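
A rough sketch of "nearest server" selection using great-circle distance is shown below. The region names and coordinates are illustrative, and the sketch assumes the client's coordinates are already known; in practice they are usually estimated, for example from the client's IP address, and network distance does not always match geographic distance.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client, regions):
    """Return the name of the region closest to the client."""
    return min(regions, key=lambda name: haversine_km(client, regions[name]))


# Hypothetical data center locations (approximate coordinates).
regions = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}

print(nearest_region((48.86, 2.35), regions))     # client near Paris: frankfurt
print(nearest_region((-33.87, 151.21), regions))  # client near Sydney: singapore
```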

These are just a few examples of load balancing algorithms. The choice of algorithm can significantly impact the efficiency, speed, and reliability of network resource allocation. Therefore, it’s crucial to understand the demands and capabilities of your specific network when choosing a load balancing algorithm.

4. Benefits of Load Balancing

Load balancing does more than distribute network load across multiple servers: it affects the efficiency, reliability, and security of modern network architectures. This section covers the main benefits that make load balancing important for any network that needs to be robust and scalable.

Improved User Experience

One of the most immediate benefits of load balancing is the enhancement of user experience. By efficiently distributing incoming requests, load balancers reduce the time it takes to load a webpage or process a transaction, resulting in quicker response times for end-users.

Scalability

Load balancing enables organizations to handle varying levels of network load by scaling out rather than replacing existing servers with larger ones. As demand grows, you can add more servers to your load-balanced pool, extending your network’s ability to handle more concurrent users.

High Availability and Reliability

Load balancing significantly reduces the impact of server failures. If one server fails, the load balancer redirects incoming traffic to the remaining servers in the pool, keeping the service available. This matters most for mission-critical applications, where downtime can have severe financial and operational repercussions.

Efficient Resource Utilization

Load balancing algorithms can direct traffic based on server performance and current load, ensuring the most efficient use of available resources. This is essential for maximizing ROI on hardware and infrastructure investments.

Better Data Management

Certain load balancing algorithms allow for session persistence, which matters for transactional applications that keep per-user state on the server. Users can complete sessions that span multiple interactions, such as a multi-step checkout, while being served by the same server throughout.

Improved Fault Tolerance

Load balancing can identify unresponsive or slow servers and route incoming requests away from them, which helps keep applications available. Because traffic is spread across the pool, it can also soften the effect of an attack aimed at one specific server, although it is not a complete DDoS defense on its own.

Ease of Management

Modern load balancers come with management interfaces that provide real-time analytics, automation features, and advanced configurations. This makes it easier for administrators to monitor network performance, troubleshoot issues, and tune settings with less manual intervention.

Geographic Load Distribution

For global services, geographic-based load balancing can route user requests to the nearest data center, reducing latency and enhancing the user experience. This is particularly beneficial for Content Delivery Networks (CDNs) and multinational enterprises.

Cost-Effectiveness

By optimizing server usage, reducing the need for additional hardware, and automating network management tasks, load balancing can significantly lower operational costs.

In summary, the advantages of load balancing go beyond merely distributing network traffic. From improving user experience to enhancing fault tolerance and reducing operational costs, load balancing is a multifaceted tool that offers comprehensive benefits for modern networking needs.

5. Challenges and Considerations in Load Balancing

While load balancing brings many advantages, it also involves trade-offs that businesses must evaluate to get the most from their load balancing strategy. This section covers the main challenges and considerations, including cost, complexity, and security risk.

Costs

One of the most evident challenges in implementing load balancing solutions is the associated cost. Hardware-based load balancers can be expensive to purchase and maintain, not to mention the costs of scaling as network demands grow. Even cloud-based load balancing services have their costs, which can vary based on usage, bandwidth, and additional features. For small and medium-sized businesses, these costs can be a significant investment.

Complexity

The deployment and ongoing management of load balancing systems introduce a new layer of complexity to network configurations. Different algorithms, routing rules, and policies must be set up correctly to ensure optimal performance. This complexity increases as you deploy more advanced features, like SSL offloading, caching, or geographic routing. Properly managing this complexity requires specialized knowledge, which means additional training for your IT team or the hiring of specialized personnel.

Security Risks

While load balancers can improve security by protecting against certain types of attacks, they are also potential targets themselves. If not correctly configured, load balancers can become the weakest link in your network security chain. For instance, they could be vulnerable to DDoS attacks or become a single point of failure if not set up with high availability in mind.

Session Persistence and Data Integrity

Some applications require that all of a user’s requests be directed to the same server for data consistency. Implementing this feature—known as session persistence—can be challenging and may not be supported by all load balancing algorithms or solutions.
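
One common way to picture session persistence is a table that remembers which server each session was first sent to. The sketch below is a simplified, in-memory illustration: the class name `StickyBalancer`, the session identifiers, and the use of round robin for first-time sessions are all assumptions made for the example.

```python
from itertools import cycle


class StickyBalancer:
    """Pins each session to the server that handled its first request."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._new_sessions = cycle(servers)  # round robin for new sessions
        self._assignments = {}               # session id -> server

    def route(self, session_id):
        if session_id not in self._assignments:
            self._assignments[session_id] = next(self._new_sessions)
        return self._assignments[session_id]


sticky = StickyBalancer(["app-1", "app-2"])
print(sticky.route("alice"))  # app-1
print(sticky.route("bob"))    # app-2
print(sticky.route("alice"))  # app-1 again, same server as before
```

The table itself is part of the challenge: it grows with the number of sessions, and if the pinned server fails the session has to be reassigned, losing any state that was kept only on that server.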

Health Checks and Monitoring

To effectively reroute traffic away from failing or underperforming servers, robust health checks and monitoring systems need to be in place. These systems must be reliable and fast to avoid creating additional bottlenecks or failure points in the network.
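
A minimal sketch of an active health check follows, assuming a plain TCP connection attempt counts as "healthy" and that a server is taken out of rotation only after several consecutive failures. The function and class names, the threshold of three, and the sample results are hypothetical.

```python
import socket


def tcp_health_check(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


class HealthTracker:
    """Marks a server unhealthy after N consecutive failed checks."""

    def __init__(self, fail_threshold=3):
        self.fail_threshold = fail_threshold
        self._failures = {}

    def record(self, server, ok):
        # A single success resets the failure count.
        self._failures[server] = 0 if ok else self._failures.get(server, 0) + 1

    def is_healthy(self, server):
        return self._failures.get(server, 0) < self.fail_threshold

    def healthy(self, servers):
        return [server for server in servers if self.is_healthy(server)]


# Feed in canned results instead of real network probes for the demo.
tracker = HealthTracker(fail_threshold=3)
for ok in (False, False, False):
    tracker.record("app-2", ok)
tracker.record("app-1", True)

print(tracker.healthy(["app-1", "app-2"]))  # ['app-1']
```

The short timeout and the failure threshold reflect the trade-off described above: checks must be quick enough to react to real failures, but tolerant enough that one dropped probe does not remove a working server.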

Vendor Lock-in

When you choose a proprietary load balancing solution, you might find yourself locked into a specific vendor’s ecosystem. This can limit your flexibility to switch vendors in the future and might impose constraints on your network architecture and scalability options.

Latency and QoS

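Different load balancing algorithms affect latency and Quality of Service (QoS) differently. For example, Round Robin makes its decision without looking at server load, so a busy server can still receive new requests, while Least Connections tracks the state of each server and can keep response times more even when request costs vary. Understanding how your chosen algorithm affects these factors is crucial for maintaining a high-performing network.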

In conclusion, while load balancing is an indispensable component for any scalable and reliable network, it does come with its set of challenges. Understanding these challenges and planning for them is critical to making an informed decision and successfully implementing a load balancing strategy in your organization.
